On context rot and context engineering

Researchers prove model performance degrades with long inputs, but we're still not sure why.

When an article trends on the front page of Hacker News for a day or longer, it generally means the article is noteworthy: a new product release, an important discovery, an article about something controversial, or a topic that fosters contentious conversations between participants. I was surprised two days ago to see a post from Chroma stay on the front page for the majority of the day: “Context Rot: How increasing input tokens impacts LLM performance”.

I wasn’t surprised because the article wasn’t good (it was very well researched and written by the Chroma research team), I was surprised because something was trending that I thought was common knowledge amongst the Hacker News crowd. The premise of the article is that an LLM’s performance degrades the longer a conversation goes on. I’ve used LLMs extensively over the last 2+ years for coding, writing, researching, and planning, and I am all too familiar with their performance declining the longer my chats become. Responses start to lose focus on the original problem, and accuracy starts to slip. This is especially noticeable when I’m using LLMs to code; the quality of code and the model’s ability to understand what I want it to do drop dramatically.

The fact that the article stayed in such a prominent position all day tells me I’ve been taking something for granted that may be less obvious to those who haven’t had the same experiences as me. And so, I think the concept of “context rot” is worth explaining in more detail here.

A brief primer on context

As you have a conversation with an LLM, your questions, along with the model’s responses, add to a “context window”. This allows the LLM to use the history of the conversation to provide relevant responses to your queries. Each time you respond to the model, the context accumulates. If you start a new chat, the context window resets, giving you a fresh start1.

As of this writing, most state-of-the-art LLMs have relatively large context windows, ranging from hundreds of thousands of “tokens”2 — which are words or parts of words — to millions of tokens. Google’s Gemini 2.0 Pro has a context window of two million tokens, enough to hold the content of roughly 16 average-length English novels. But as you’ll see from Chroma’s experiments, loading that much data into a model’s context could mean you’ll have a harder time getting the results you want out of it.

Finding needles in a haystack

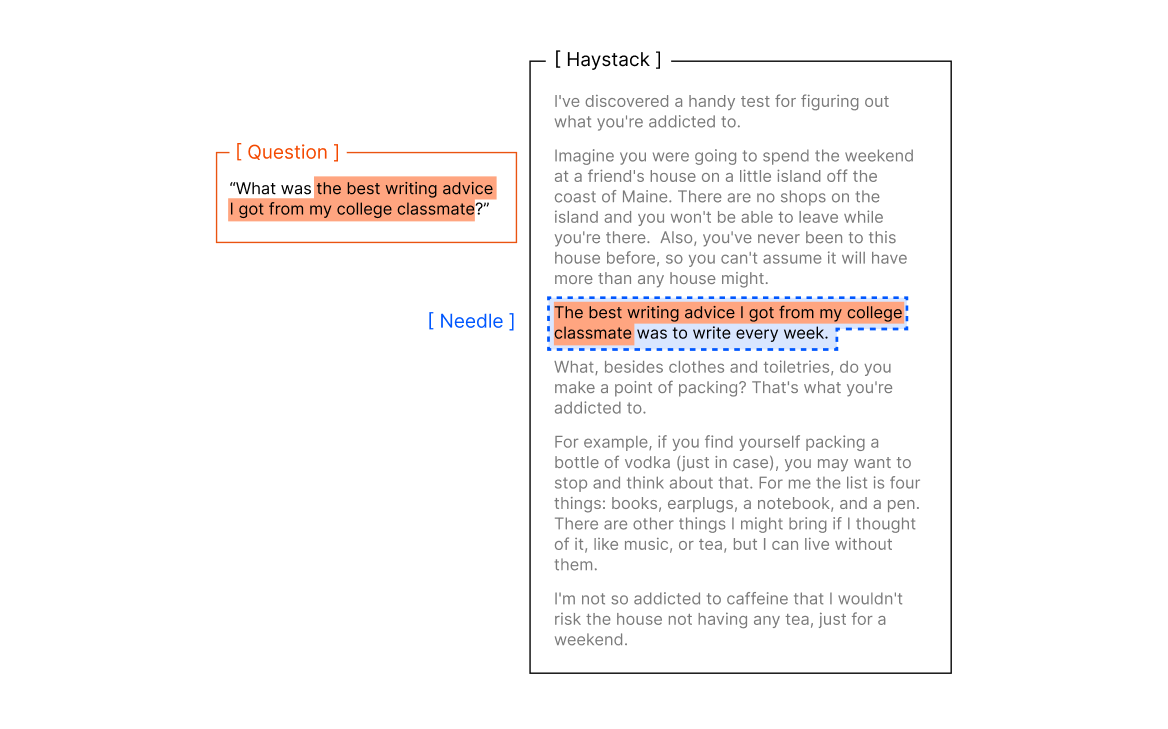

What Chroma’s team quantified in their research is the anecdotal stories engineers like me have known for some time: the more data you throw into context, the harder it is for the LLM to give you accurate responses about that data. The problem is characterized by AI researchers as a “needle-in-a-haystack” (NIAH) problem, which places a random fact (the “needle”) somewhere into a context window (or, the “haystack”), then asking the model a question about the fact. The larger the haystack, the harder it is to find the needle. In benchmark studies with NIAH datasets, LLMs can often times “cheat” the test by looking for literal matches of the needle in a haystack, which works well for questions like the one below:

The needle is relatively easy to find in a haystack when it has a lexical similarity to the question you as the chatbot3. But, what about finding answers that aren’t so literal? A team at Adobe decided the NIAH benchmark was skewed too-heavily towards lexical similarities, and released a benchmark designed to have minimal lexical overlap between the question and the needle:

…in these benchmarks, models can exploit existing literal matches between the needle and haystack to simplify the task. To address this, we introduce NoLiMa, a benchmark extending NIAH with a carefully designed needle set, where questions and needles have minimal lexical overlap, requiring models to infer latent associations to locate the needle within the haystack.

Using the question/needle pairs from the NoLiMa dataset, Chroma researchers evaluated how well the model responded. For example, asking the model a question about which book character from the dataset had “been to Helsinki” is a challenging question, because the NoLiMa dataset doesn’t explicitly have an answer to this question in its data. However, the data does refer to a character who “lives next to the Kiasma museum,” which is in Helsinki. The model would have to have knowledge of the world to make that association, and then associate that with a character. So the needle in the haystack is the phrase “Actually, Yuki lives next to the Kiasma museum.”

NoLiMa not only helps test for non-lexical matching, but also challenges a model’s knowledge of the world. In fact, over 70% of the needle-question pairs in NoLiMa require outside knowledge, making this a better benchmark for testing abstract knowledge associations than a typical NIAH dataset that excels only with high lexical similarities to a user’s queries.

Experiments and results

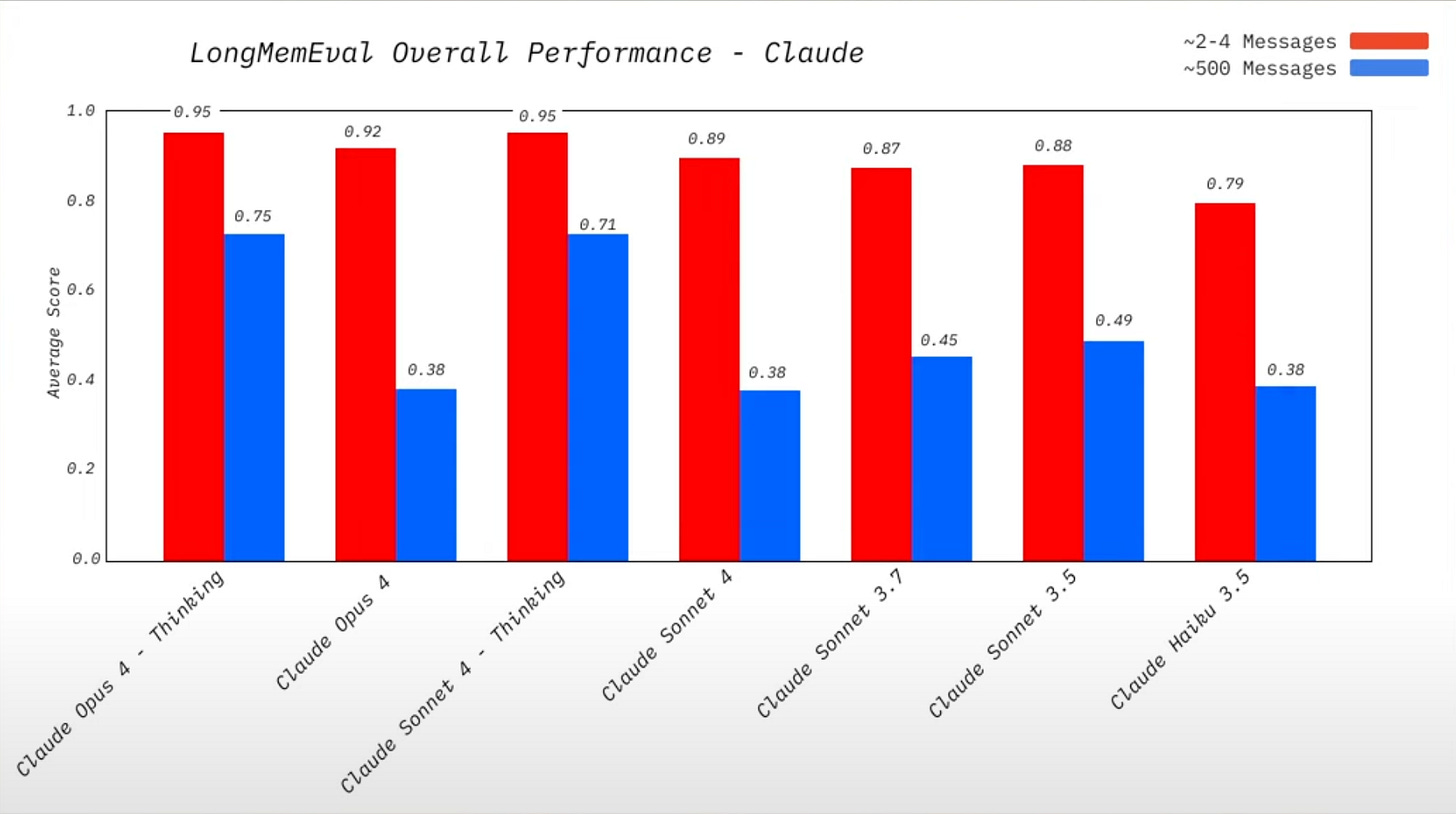

The Chroma team set up four controlled experiments to evaluate how context affects model responses. As you might expect, what they were able to prove is that a model degrades as the data in its context window fills up.

They also found that ambiguity in the input and distractors (text that might be topically related to the needle but doesn’t answer the question) can excacerbate the issue even further.

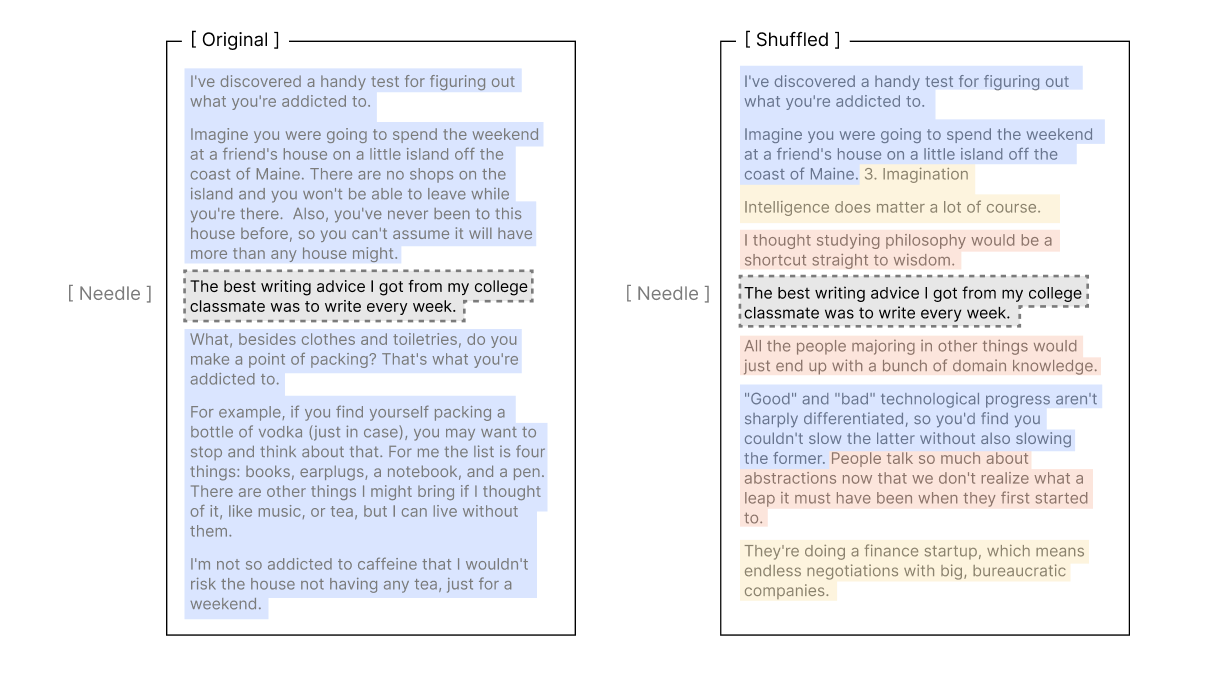

But what I found really interesting was a particularly creative experiment on the structural pattern of the haystack. They wanted to find out if models preferred context that was orderly with a natural flow of ideas, or if the models preferred a more haphazard shuffling of the context:

As it turns out, they found that structured and orderly context actually hurts model performance:

Although it seems counterintuitive, models perform worse when the haystack preserves a logical flow of ideas. Shuffling the haystack and removing local coherence consistently improves performance.

And although I haven’t tested this yet, I’m curious now to see if a context window full of code (rather than just narrative text) behaves similarly.

In summary: context engineering

I highly encourage you to read the original post to see the setup of these experiments and the results the team obtained. What I came away with is that there is work to be done in context engineering. The reason most of us fill up these context windows with many back-and-forth turns with the LLM is because we’re “getting somewhere”. We’ve made some progress on an idea, or getting close to an ah-ha moment in the conversation, and starting over with zero context feels like most of that momentum will be lost.

This is a familiar challenge for anyone who's spent time working with LLMs, especially if you’ve used them extensively for coding. Some chatbots offer a “compact” feature, which can compact your conversation into something more token-friendly, emptying the original context window and replacing it with the compacted version. If your chatbot doesn’t have a “compact” feature, you can also ask the chatbot to “summarize this conversation”, or instruct the chatbot to keep a high-level “changelog” of every major change it makes to your codebase. When you notice performance starting to degrade, you can simply start a new chat and include the summary and/or changelog as part of your initial input. These are just bandages on the problem and work remains to be done, but it’s good to see teams like Chroma putting out clear research with reproducible experiments.

Some chatbots, like ChatGPT and Claude, have a feature to remember details or personal preferences if you tell them to. Telling them to remember your birthday for example means they have access to that context even in new conversations.

You can experiment with Open AI’s tokenizer to learn more how words are tokenized: https://platform.openai.com/tokenizer

Lexical similarity measures how much overlap there is between the words in two texts. A score of 1 means the vocabularies completely overlap; a score of 0 means they share no words at all.